2024/04/16 Comparing and Buying a 1.5 ATA to 2.0 ATA Hyperbaric Soft Chamber (HBOT), and Settled with Olive 2.0ATA Soft Lying Type Hyperbaric Chamber


2024-04-16 23:10

by Merlin

in Hbot

About Me

First, I’d like to state that I’m an engineer. I’m not a salesperson, I’m not a lawyer, and I’m not a doctor. I wrote this page as a result of my personal research: when I decided to get a soft chamber of my own, I spent quite a bit of time looking at vendors and available chambers, and talked to salespeople and engineers from multiple companies to get answers to the pointed questions I had about their equipment. I wanted to know why I should trust it (soft chambers not blowing up), and whether they could convince me that their concentrator and inflator were sized sufficiently to actually provide 95% O2 inside a fully inflated soft chamber. As I expected, some solutions unfortunately skimped on the concentrator and did not take into account that, under pressure in the chamber, it has to produce up to twice the liters per minute to deliver the full amount required for breathing.

I live in California. Most of this document will be useful to you elsewhere, especially if you are interested in the Olive 2.0 ATA soft chamber, but you will have to find your own local vendor.

Do you need HBOT, will it cure xxx?

This is a very long topic. I did a lot of my own reading and video watching on it, but I will not summarize it here: it would be too long and off topic. I’ll simply say that there are plenty of off-label (not FDA verified) HBOT treatments that seem to help healing from various things, including long covid, and some recent studies seem to indicate that HBOT can help with general health and longevity:

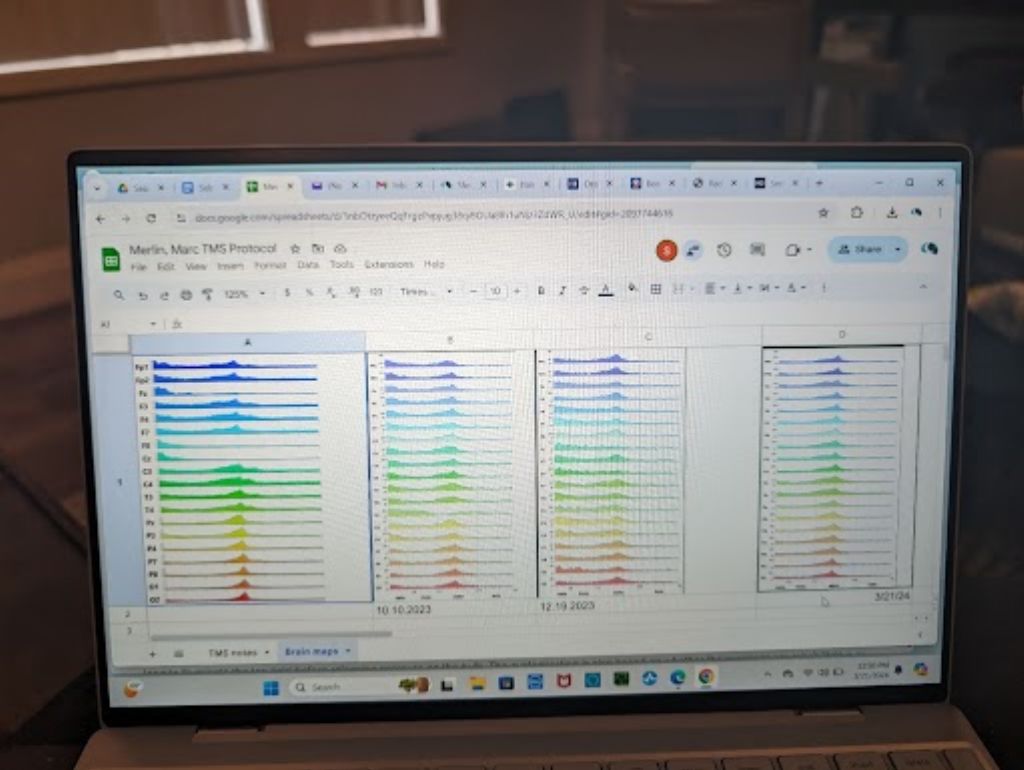

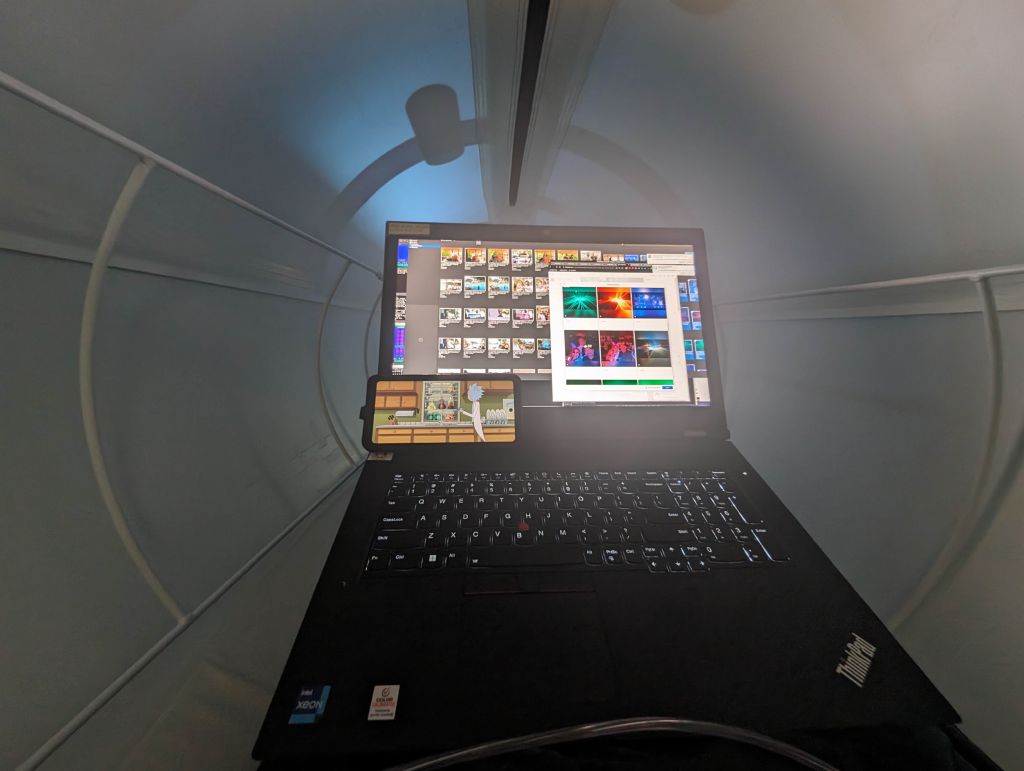

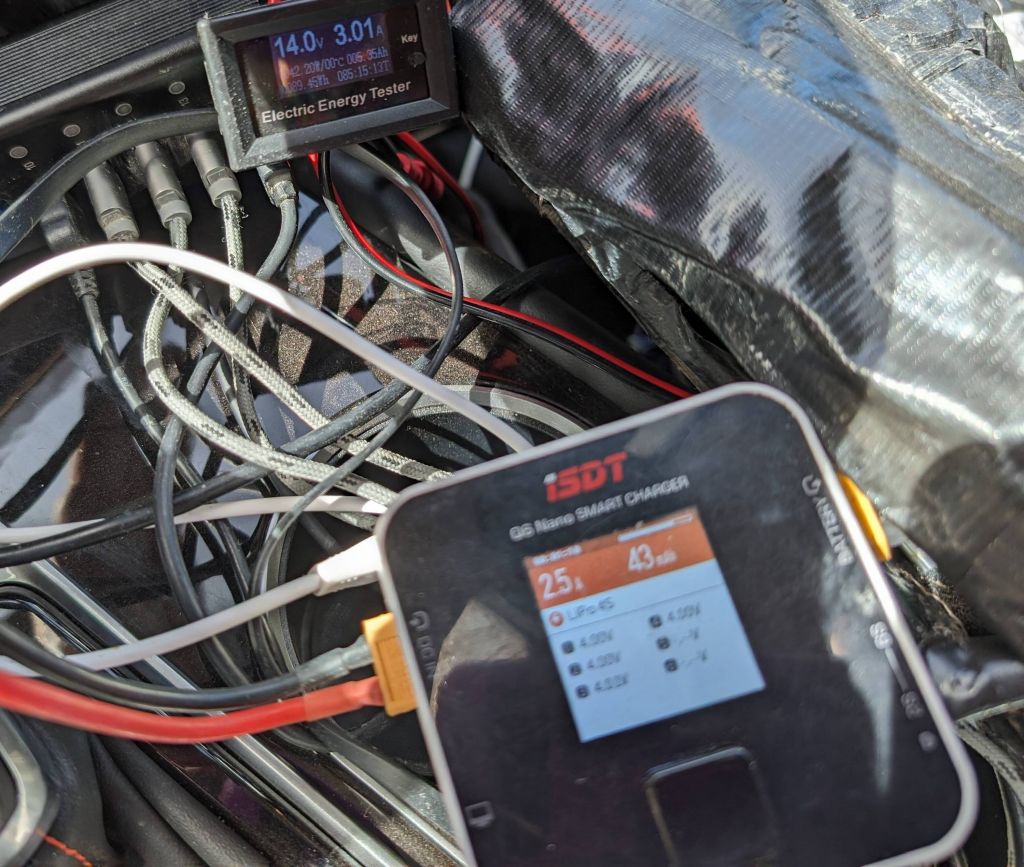

Hard, soft, 1.3ATA, 1.5ATA, 2.0ATA?

Hard chambers allow for pressures of 2.0 ATA or above, and are the original chambers used for FDA approved treatments. Note that most treatments people are seeking are not FDA approved, and therefore haven’t been carefully studied to show whether they work better at 2.0 ATA than at, let’s say, 1.5 ATA.

1.3 ATA was the original number for soft chambers, and some claim it’s the only pressure “approved” by the FDA. I have asked for supporting documentation of this every time, and never got any from anyone. Either way, as far as I can tell the FDA does not currently regulate soft chambers. You could make a point that it should, as soft chambers can be dangerous if they explode, but as of right now I have found nothing that says they are regulated. I have heard of at least one instance of an FDA approved 1.3 ATA chamber bursting because it was poorly built, so there you go.

If you’re still worried that somehow 1.3 ATA chambers are the only FDA approved ones, note that many 1.3 ATA chambers are not actually FDA approved. And if selling 1.5 ATA or above were somehow illegal, which I have found nothing to indicate, the liability would be on the seller, not on you, the buyer. If sellers have figured out that it’s legal for them to sell 1.5 or 2.0 chambers to you, that’s honestly all you should care about. That said, I am not a lawyer; those statements are my opinion, not legal advice or fact.

Hard chambers are obviously more solid and hopefully better built, but they also cost tens of thousands of dollars, which was more than I was willing to pay, especially now that I found a 2.0 soft chamber for a lot less money. If you are getting FDA approved treatments that require 2.0 ATA or more, I would go with a hard chamber and get treatments paid by insurance.
If you are getting off-label treatments that you have to pay for, I would get them in a cheaper soft chamber (where I live in Silicon Valley, a soft chamber was $105-$120/h and a hard chamber was $250/h). This page (https://drantone.com/442649809/452521397) explains why with 1.5 ATA you are getting a fair amount of the benefits of 2.0 ATA. At the same time, it’s still true that you’ll be getting more with a 2 ATA chamber in most cases; that page was written to encourage soft chamber treatments as good enough compared to hard chamber treatments that cost double or more. I agree with that page and statement, but if you can now get a 2.0 soft chamber for not much more money, that seems like a better option to me. You can make your own decisions on that one.

Chamber convenience, phones, laptops

Bigger hard chambers are the most comfortable but cost a lot more. Also, hard chamber centers use an old FDA protocol that requires changing your clothes and not taking in anything electrical, including a cell phone or laptop, due to a perceived increased risk of fire. To me, the risk is only real if you are trapped in a chamber and cannot get out unless someone lets you out. The 2 accidents I read about involved people in hard chambers who were not able to exit while the operator was not nearby to let them out.

Long story short, all chambers, hard or soft, have an increased risk of fire due to compressed air at higher than 21% O2. If you breathe with a mask, O2 in the chamber will hopefully be less than 30%, but “it depends”. If somehow your cell phone battery were to decide to catch fire that day (very, very rare but possible), things will burn very well and very fast. If you do not know how to get out of the chamber very quickly (or maybe you’re in a hard chamber where you cannot), you are at clear risk of dying in the chamber, hence the above policy.
But in a soft chamber that you can open from the inside, you can realistically get out in 20 seconds or less, so if a fire were to happen, it’s on you to just get out quickly and you should be fine. This also means you can bring a cell phone or laptop in the chamber (I would not take an old laptop with a spinning hard drive, as the added pressure could potentially damage an old hard drive; all new laptops with an SSD are going to be fine).

Compressor and Concentrator for soft chamber

The compressor is what inflates the chamber. The soft chamber is constantly inflated with new outside air (at 21% O2), and a valve in the chamber exhausts air at a certain pressure. This ensures your chamber pressure does not get too high and renews the air in the chamber: it gets out the CO2 you exhale, and also helps ensure the O2 level of the chamber air does not climb too high from the O2 that leaks from the mask into the chamber. This mostly lessens the fire risk mentioned above.

The concentrator is what takes outside air, filters out most of what isn’t O2, and creates 95%-ish O2 from regular air. The problem is that outside air is at 1.0 ATA and the 95% O2 needs to be pushed into a chamber that is at 1.5 ATA or 2.0 ATA. This requires the equipment to actually filter up to twice the amount of air (liters per minute) that you will breathe. It is expected that you need 7 to 10L per minute for normal breathing, but a 10Lpm concentrator will only deliver 5Lpm at 2 ATA, since the gas is compressed from 1 ATA to 2 ATA and the flow is therefore divided by 2. It seems that Lannx/Hugo did not take that into account, and I’ve been told by one person who tested it that their 15Lpm concentrator fails to deliver even 7Lpm once you reach 2 ATA. This will not kill you, but it will fail to deliver the high O2 that HBOT is based on and will mostly negate the benefits of having a 2.0 ATA chamber. It is important to have a mask that does not leak and to make sure the flow of the concentrator is high enough.
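The flow argument above can be sketched numerically. This is only a minimal sketch, assuming the simplified model used in this section (gas volume shrinks in proportion to absolute pressure, so the flow at chamber pressure is the rated flow divided by the ATA). The function names are made up for illustration, and a real concentrator may do worse than this ideal figure.

```python
def delivered_lpm(rated_lpm: float, chamber_ata: float) -> float:
    """Ideal flow seen inside the chamber (liters per minute at chamber pressure).

    Assumption: the rating is measured at 1.0 ATA and the gas simply
    compresses in proportion to absolute pressure.
    """
    if rated_lpm < 0 or chamber_ata < 1.0:
        raise ValueError("expected rated_lpm >= 0 and chamber_ata >= 1.0")
    return rated_lpm / chamber_ata


def required_rating_lpm(breathing_lpm: float, chamber_ata: float) -> float:
    """Minimum 1.0 ATA rating needed to supply breathing_lpm inside the chamber."""
    if breathing_lpm < 0 or chamber_ata < 1.0:
        raise ValueError("expected breathing_lpm >= 0 and chamber_ata >= 1.0")
    return breathing_lpm * chamber_ata


if __name__ == "__main__":
    for rated in (10.0, 15.0, 20.0):
        for ata in (1.5, 2.0):
            print(f"{rated:.0f} Lpm rated -> {delivered_lpm(rated, ata):.1f} Lpm at {ata} ATA")
    for need in (7.0, 10.0):
        print(f"{need:.0f} Lpm at 2.0 ATA needs a rating of {required_rating_lpm(need, 2.0):.0f} Lpm")
```

Under this model, the 7 to 10Lpm breathing range translates to a 14 to 20Lpm rating at 2 ATA, before accounting for mask leaks or a unit that underperforms its rating.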
See below a screenshot of the Lannx/Hugo concentrator failing to achieve both the 95% O2 and the 7Lpm+ flow it’s supposed to make at 2 ATA. I also recommend you consider a solution that includes a mask like this: mostly, it will pool up extra O2 made by the concentrator and give it to you during a deep breath, when you may breathe more than what is being delivered by the machine (the goal is to avoid breathing chamber air, which is 30% O2 or less).

1.5 ATA vs 2.0 ATA soft chamber

So, why would you get a 1.5 ATA chamber when 2.0 ATA is now available? Mostly because they have been around longer and are more trusted to last, at least the good ones. Used, they can be a little bit cheaper than the 2.0 ATA chamber mentioned below. They are also a bit easier to get in and out of if you are by yourself. The Olive/AHS 2.0 ATA chamber has a lot of belts to keep it secure at that added pressure, and takes a bit more time to get in and out of. Depending on the compressor, it will also take more time to reach full pressure (meaning more time before the treatment really starts).

Is 1.5 ATA enough for your treatment? I can’t say. Most of those treatments are off label, which means they were not tested to have benefits at any pressure. All we have is people’s best guesses. Intuitively it seems obvious that 2.0 ATA is better in almost all cases, but 1.5 ATA is likely good enough in many cases. Or maybe with 30 treatments at 1.5 ATA, you get the same as 20 treatments at 2.0 ATA. Total guess though.

I personally chose to get 2.0 ATA since it’s available now. The one downside is that it’s a newer technology that isn’t multiple years old, so you are making a tiny leap of faith that it will hold up long term. Realistically a proper manufacturer has tested the chamber at higher pressures (burst point) as well as over many inflation cycles, so I’m comfortable enough with that. Something else to consider is that some 1.5 ATA chambers do end up leaking eventually.
Also, probably too many are sold with a concentrator that does not actually deliver 7-10Lpm of 95% O2 at 1.5 ATA (too many are undersized, the mask has leaks, there is no rebreather bag, etc.). If you get a 2.0 ATA soft chamber and run it at 1.7 ATA or 1.5 ATA, you are more likely to get a more solid chamber and a better O2 system.

1.3 ATA chamber choice

Unless you get a great deal ($5000 or less) on a used soft chamber that you can get your hands on quickly, vs a new chamber that could take weeks or more than a month to get delivered, I would not bother with 1.3 ATA. Those chambers are obsolete as far as I’m concerned. This is my opinion, you don’t have to agree and I’m not interested in trying to convince you either :) If you feel happier buying a 1.3 ATA chamber, feel free. In that case do ask to see the FDA approval paperwork for that chamber, not just some vague statement that chambers over 1.3 ATA are not FDA approved, which avoids the question.

1.5 ATA chamber choices

The list below is not complete. I did not carefully compare these chambers, although I had 15 treatments in Dr Antone’s Big Blue O2 since he was local to me. That chamber worked fine for me and I can recommend it. The AHS (Affordable Hyperbaric Solutions) 1.5 ATA options also look solid and are cheaper, so they are definitely worth considering.

I worked directly with Dr Antone and can recommend him. I also talked quite a bit with Brian Enyart from AHS to make sure he understood the engineering issues with higher pressure soft chambers and with sizing concentrators correctly, and to make sure 2.0 soft chambers were properly tested to be safe. So I can recommend Brian and AHS too. This is not to say there aren’t other worthwhile 1.5 ATA solutions or companies, just that those are the 2 I talked to and feel confident recommending.
You will note that the Big Blue O2 option is more expensive, but that’s the one I used for my own treatments, and it comes with 2 concentrators that deliver 20Lpm combined. That’s an extra cost of $1500 for the 2nd concentrator, but if you skimp on that and don’t get enough O2, breathing a much lower O2 concentration will negate most of the benefits of the higher pressure. So basically make sure the 1.5 ATA system you get has a concentrator that is at least 15Lpm, and honestly I think 20Lpm is better. This is why you may want to consider the more expensive chamber offer from Dr Antone, which includes 20Lpm, but I’ll leave it up to you to get the exact pricing and make that decision. From my own research, I believe those 3 chambers should hold up at 1.5 ATA, and both vendors will stand behind their product with a local US warranty.
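One way to see why O2 concentration matters as much as chamber pressure is to compare the partial pressure of the O2 you inhale, which by Dalton's law is the O2 fraction times the absolute pressure. A minimal sketch follows; the helper name is hypothetical, the 30% figure is the chamber-air estimate used earlier on this page, and this is only arithmetic, not a statement about treatment outcomes.

```python
def o2_partial_pressure_ata(o2_fraction: float, chamber_ata: float) -> float:
    """Partial pressure of inhaled O2, in ATA (Dalton's law: fraction x total pressure)."""
    if not 0.0 <= o2_fraction <= 1.0:
        raise ValueError("o2_fraction must be between 0 and 1")
    if chamber_ata <= 0:
        raise ValueError("chamber_ata must be positive")
    return o2_fraction * chamber_ata


# Illustrative scenarios only: (O2 fraction breathed, chamber pressure in ATA).
scenarios = {
    "room air, no chamber": (0.21, 1.0),
    "95% O2 via mask at 1.5 ATA": (0.95, 1.5),
    "95% O2 via mask at 2.0 ATA": (0.95, 2.0),
    "30% chamber air at 2.0 ATA": (0.30, 2.0),
}

if __name__ == "__main__":
    for label, (fraction, ata) in scenarios.items():
        print(f"{label}: {o2_partial_pressure_ata(fraction, ata):.3f} ATA of O2")
```

In this comparison, breathing mostly chamber air at 2.0 ATA (about 0.6 ATA of O2) lands well below a properly fed mask at 1.5 ATA (about 1.4 ATA of O2), which is the point about undersized concentrators.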

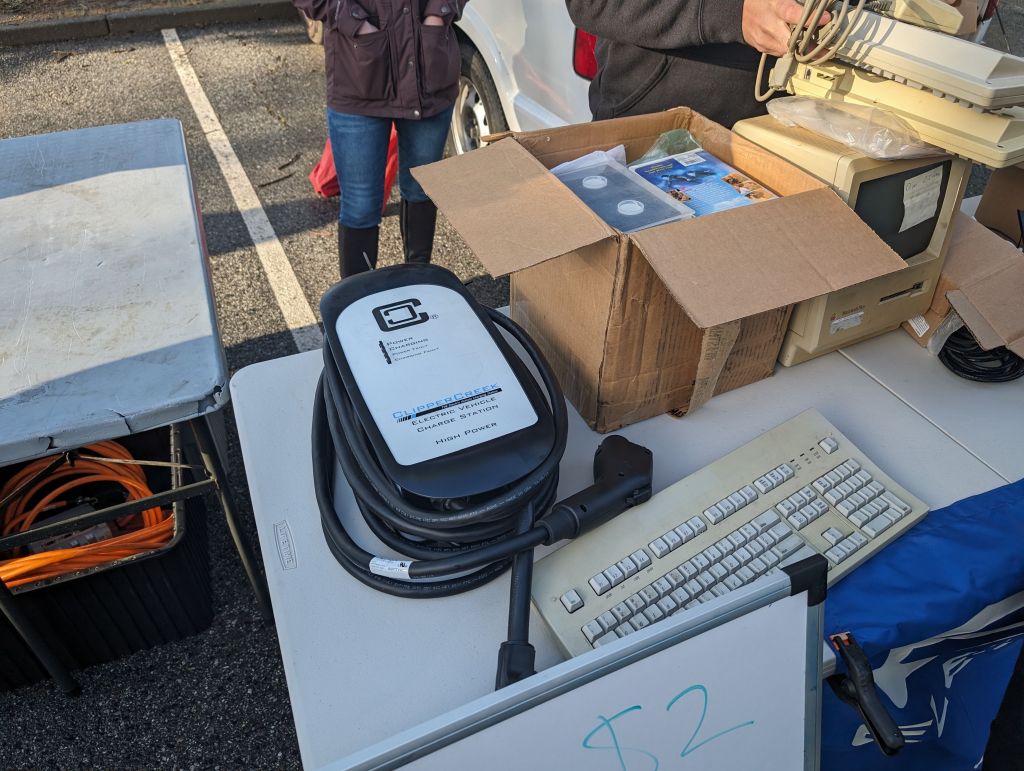

2.0 ATA chamber options

That said, while doing my research, it became clear that there are now 2.0 ATA soft chambers. I found at least 3 different kinds. Two are on meubon, which has terrible customer reviews, so I didn’t trust that company. The last 2 are actually the same chamber sold by 2 different companies: Olive, who makes the chamber, and Lannx/Hugo, who resells it. Note that each company uses a different inflator/concentrator.

The last 2 chambers are the same chamber, made by Olive and sold by 2 different companies.

2.0 ATA chamber choice: Olive/AHS vs Lannx/Dr Hugo

Lannx/Dr Hugo does more aggressive advertising than Olive and has more documents online, but when I dug into it, I found things that worried me. After talking to salespeople from both companies, I eventually figured out that Olive is the one making the chamber.

Note that this new soft chamber supports 2.0 ATA, and was tested above that, because of its belting system. It does however mean that you have to fasten a few belts/clips when you get in and out. That’s the price for 2.0 ATA. This can be done by a single user without external help.

AHS is a US distributor of Olive and offers US warranty and service. Contact AHS if you are in the US, and Olive otherwise; they’ll tell you if they sell directly to your country or have a distributor there. Dr Hugo/Lannx does sell directly from China, but if you have any warranty issues, you’ll need to work with them directly. Make sure you are comfortable with that.

Lannx sent me a video of their concentrator when I asked questions, and this screenshot to tell me not to worry and that everything was OK. The screenshot shows only 74.5% O2 and only 3.9L per minute at 2 ATA. Both are absolutely inadequate, so this very much worries me, and for that reason alone I cannot recommend their U8 concentrator. When I challenged them, they said they had a U8+ that also did a supposed 15Lpm of O2 but could inflate the chamber twice as fast. As far as I can tell, that concentrator is still not enough for 2 ATA. When I challenged them again, they were a bit annoyed, but eventually said they had a U9 concentrator that was 20Lpm, which from what I can tell (just a guess) could be big enough to do 7-10Lpm at 2 ATA. I however noticed the shipping weight for that machine: it was listed at 130kg (almost 300lbs). It’s huge!
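The numbers in that screenshot can be checked against the targets used throughout this page (roughly 95% O2 and at least 7Lpm at chamber pressure). Here is a small sketch of that check; the class and function names are hypothetical, and the default thresholds are the ones from this page, not a manufacturer specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """One concentrator reading taken at chamber pressure."""
    o2_percent: float
    flow_lpm: float


def shortfalls(reading: Reading,
               min_o2_percent: float = 95.0,
               min_flow_lpm: float = 7.0) -> list[str]:
    """Return a description of every target the reading misses (empty list if none)."""
    problems = []
    if reading.o2_percent < min_o2_percent:
        problems.append(
            f"O2 is {reading.o2_percent}% but the target is {min_o2_percent}%"
        )
    if reading.flow_lpm < min_flow_lpm:
        problems.append(
            f"flow is {reading.flow_lpm} Lpm but the target is {min_flow_lpm} Lpm"
        )
    return problems


if __name__ == "__main__":
    # Values read off the screenshot described above (at 2 ATA).
    screenshot = Reading(o2_percent=74.5, flow_lpm=3.9)
    for problem in shortfalls(screenshot):
        print(problem)
```

Asking a vendor for a reading like this, taken at full chamber pressure, and running the same comparison is a quick way to judge any concentrator.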
The prices quoted by both vendors in China were not identical but similar enough. The chamber is ultimately the same, made by Olive like I said, and both companies use their own concentrator/inflator. Olive makes their own and told me they had been in the business for many years. They also assured me that they totally understood my concern about delivering 95% O2 at 2 ATA and that they had tested their concentrator to indeed do this. I was not able to verify it, but I have a reasonably good feeling that they know what they’re doing.

Dr Hugo/Lannx seems to buy their concentrator from another company, and out of the box will ship a system that cannot deliver the O2 needed at 2.0 ATA (the U8 concentrator that is rated for 15Lpm, but fails miserably in the picture above). I cannot believe they even tried to reassure me with a screenshot that actually showed the failure of their concentrator to do the job. It is a bummer, as their concentrator has a fancy looking screen and controls that look nicer than the ones from Olive, but then again this is worthless to me if it doesn’t actually do the job of giving me 7-10Lpm of O2 at 95% in a 2 ATA environment.

Last but not least, AHS has shown me pictures of badly packaged used equipment (sold as new) that was shipped to them by Dr Hugo a year ago. This is obviously bad and cause for concern too, even if they have stopped this practice today.

Verdict: buy the Olive chamber for sure, and if you’re in the US, you can buy it from AHS / affordablehyperbaricsolutions@gmail.com. As of this writing, pricing is $10,700 with ground shipping, which takes up to 2 months. Air shipping is $1000 more. If you live elsewhere, contact Olive and ask them if they are doing direct sales in your country or have a local distributor. Obviously a local distributor will cost a bit more, but will ensure that everything is fine and take care of warranty issues should any arise.
General Buying Advice

This page and the research I did are in no way exhaustive, not even close. I was mostly interested in a soft chamber that could do 2.0 ATA, and could not trust 2 out of the 3 that I found, so I only had to compare two Chinese sellers for the same 2.0 soft chamber. Still, questioning both over multiple days, and figuring out who had hardware that was properly tested and who was actually making the chambers, took a while.

If you’re sticking to 1.5 ATA, I know there are many more options. I found 3 on the way that I listed above, and I’m sure there are more. If you’re buying from Dr Antone or AHS, I believe you are in good hands and you can just trust them to have done things right (I questioned both carefully and was happy with the answers).

I didn’t do price comparison shopping since it was not useful in my case: I cared about having a 2.0 chamber that would actually deliver what it was meant to, and I didn’t trust the Hugo one would. For 1.5 chambers, the prices I gave are approximate from what I found, and they are not exactly comparing apples to apples. So if you are comparing prices, make sure you are comparing chambers that offer the same things, from functionality to reliability, and that you are confident the air system will give you 95%-ish O2 inside the chamber at enough Lpm for you to get full breaths from it, and not breaths that also include chamber air.

Useful questions you can ask when shopping:
