The solution was simple: just build a second mythtv box, move my main mythtv setup to the new hardware, make the old hardware a secondary frontend, and upgrade the hardware in the older PC after that. That was a good plan on paper.

So, the first part, the new PC, went ok because I used a bit of brains and threw money at the problem. I'm just too old to fuck around with PC hardware and build my own HTPC: there are too many things that can fail to work together, requiring multiple trips to the store to exchange parts, take stuff out and put it back in...

I sent a bid to Microcenter, and they actually did a good job building the HTPC. I got a good enough case, was able to get drivers to talk to the front panel LCD, and effectively everything worked except the built-in IR port, which was hardwired to only talk to a Microsoft remote (no thank you). After adding a PVR-350 and wiring its IR receiver, everything worked hardware-wise. For the record: Asus P5E-VM HDMI G35, dual core duo 3GHz, and I got the built-in Intel video chip to work with Xorg. The case is an Antec Fusion Black 430 HTPC, which is not small but fairly nice.

Mmmh, and then:

Moving my mythtv setup to the new box cost me a lot of lost hair and sleep. This is where the DB in mythtv is a pain in the ass. I had to hand edit the DB to change the IP of my main mythtv server. I didn't even try anything as foolish as renaming the hostname, especially as I had called my main myth server 'myth', which makes a search/replace in the DB a guaranteed failure: that string shows up all over the place.
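To make the search/replace problem concrete, here is a minimal sketch in Python. The rows, setting names and paths are made up for illustration and are not taken from my actual DB; the point is only that a blind replace of 'myth' also rewrites every other value that happens to contain those four letters, whereas an exact match on the hostname column does not.

```python
# Hypothetical rows shaped like settings entries; names and values are illustrative only.
rows = [
    {"value": "BackendServerIP", "data": "192.168.1.10", "hostname": "myth"},
    {"value": "RecordFilePrefix", "data": "/var/lib/mythtv/recordings", "hostname": "myth"},
]

def naive_rename(rows, old, new):
    """Blind search/replace over every field, like sed on a DB dump."""
    return [{k: v.replace(old, new) for k, v in row.items()} for row in rows]

def exact_rename(rows, old, new):
    """Only touch the hostname column, and only when it matches exactly."""
    return [
        {**row, "hostname": new} if row["hostname"] == old else dict(row)
        for row in rows
    ]

naive = naive_rename(rows, "myth", "newbox")
exact = exact_rename(rows, "myth", "newbox")

print(naive[1]["data"])  # the recordings path got mangled too
print(exact[1]["data"])  # the recordings path is untouched
```

With a hostname that is not a substring of anything else, the blind replace would have a fighting chance. With 'myth', not so much.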

What happened is that I changed a hostname field that was supposed to be NULL, and later set it back to NULL, except that phpmyadmin was nice enough to store the ASCII string 'NULL' instead of a real NULL. That made the debug output perplexing: mythtv was effectively looking for NULL and not finding it, in a DB that looked like it contained NULL.
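For anyone who hits the same thing, the difference is easy to reproduce. This is a minimal sketch that uses Python's built-in sqlite3 as a stand-in for MySQL (the NULL-versus-string behavior shown here is the same in both); the table layout and the values are assumptions for illustration, not a copy of the real mythtv schema.

```python
import sqlite3

# In-memory stand-in for the real DB; table and column names are illustrative.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE settings (value TEXT, data TEXT, hostname TEXT)")
conn.executemany(
    "INSERT INTO settings VALUES (?, ?, ?)",
    [
        ("MasterServerIP", "192.168.1.10", None),  # a real SQL NULL
        ("MasterServerPort", "6543", "NULL"),      # the four-character string 'NULL'
    ],
)

def count(where):
    """Count rows matching a WHERE clause."""
    return conn.execute(f"SELECT COUNT(*) FROM settings WHERE {where}").fetchone()[0]

real_nulls = count("hostname IS NULL")      # only matches the real NULL
string_nulls = count("hostname = 'NULL'")   # only matches the string

# The repair: turn the string back into a real NULL.
conn.execute("UPDATE settings SET hostname = NULL WHERE hostname = 'NULL'")
after_fix = count("hostname IS NULL")

print(real_nulls, string_nulls, after_fix)
```

Both rows display as NULL in a casual look at the table, which is exactly why it took me so long to spot: a query for `hostname IS NULL` silently skips the row holding the string.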

Thanks to Mikal for steering me in the right direction for debugging this after about a week of pulling my hair out... After that, everything was working.

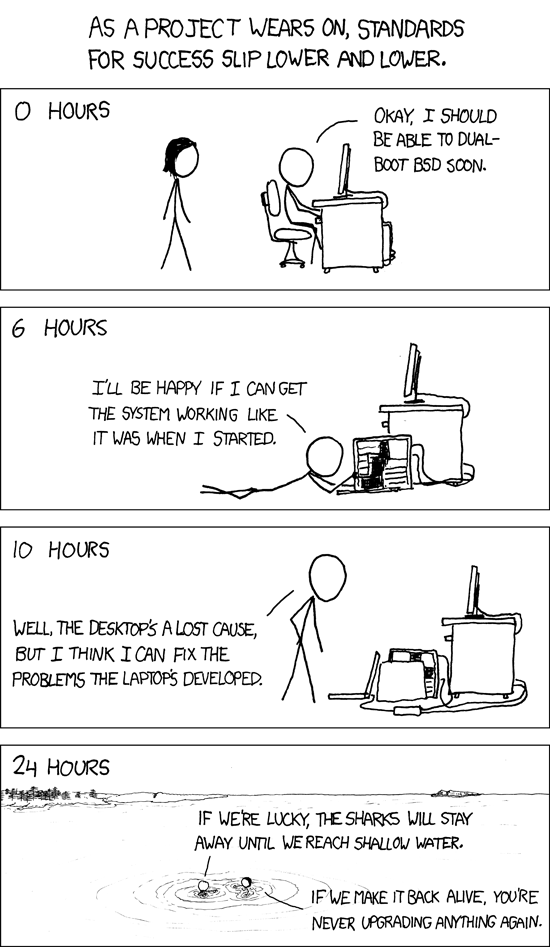

This is where a smart person would have quit while he was ahead, but no, I had that 3rd task, which was to upgrade the CPU in my old myth box (an AMD Sempron 3100+). I ordered an AMD 4000+ (2.6GHz instead of 1.8GHz), the fastest socket 754 upgrade available for that motherboard. I hoped that it would be fast enough to decode H264/1080p, but even finding that out turned out not to be easy.

My old HTPC

The CPU of Doom

One of my many attempts at making it work: a beefier power supply from my desktop PC

So the full story took over a month, but basically the new CPU has an integrated memory controller that is very subtly incompatible with my motherboard (I probably have an older, bad revision of the hardware).

End result: the CPU works fine if I limit the memory in Linux to 252MB. Anything beyond that and it'll crash. Lovely!

(yes, yes, I really tried everything: other memory, other slots, better power supply, memtest, standing on one foot, etc...).

And the best part, kinda? After about a month of trying I did get Linux to boot and work with 252MB, and was able to verify that even overclocked to 2.8GHz, the new CPU can't decode H264 at 1080p anyway (including with the enhanced Windows software decoder you pay for).

Boy, I want my 20 hours back!